EECS 298: Social Consequences of Computing

Homework 2: Celebrity Linkage Attack

Due 11:59 PM EST on Friday, February 21rd, 2025.

Written Points: 22 Coding Points: 38 Total Points: 60

Submission & Grading

This assignment is made up of two parts:

- Programming - submit

HW2.py - Written Reflection - submit a PDF with your responses

You’ll submit both parts on Gradescope. Part 1 will use the Code Submission assignment while Part 2 will use the Homework 1: Written Responses assignment. To access Gradescope, use the link on Canvas.

[!IMPORTANT]

Please do not handwrite your written responses - using any word processor (Word, Google Docs, etc.) or LaTeX is fine!

Part 1 will be graded using an autograder, so you’ll be able to get feedback as soon as you submit - you can submit any number of times until you feel happy with your score!

Part 2 will be graded manually, but you can still resubmit as many times as you need to before the deadline.

Introduction: The NYC Taxicab Database

The New York Freedom of Information Law (FOIL) grants the public the ability to access, upon request, records maintained by state government agencies. This includes the New York City Taxi and Limo Commission (NYC TLC), an agency which manages the city’s taxi cabs. In 2013, a data analyst named Chris Whong submitted a FOIL request to the NYC TLC and was in turn provided with a database containing full records of every taxi ride given in New York City that year. Chris Whong then published this data in its entirety. Due to the public nature of the NYC taxi system, along with the poor anonymization of the database done by NYC officials, this database is ultimately highly vulnerable to a linkage attack.

Recall that a linkage attack requires a (seemingly innocuous) feature or features contained in two or more datasets, one dataset containing personally identifiable information (PII) and the other containing sensitive information. For this assignment, you will perform a real linkage attack on this dataset by using public photos captured by paparazzi documenting celebrities in NYC taxi cabs since many of their pictures show the cab’s unique medallion number. In this case, the taxi medallion number and the date of the picture/trip will be used as the PII and the sensitive information we are trying to recover is each celebrity’s tip amount for their taxi trip.

Part 1 - Executing and Preventing Database Attacks

First, use the wget command to get the HW2.py starter code file and the two csv files used in this homework.

$ wget https://raw.githubusercontent.com/eecs298/eecs298.github.io/main/homeworks/HW2.py

$ wget https://raw.githubusercontent.com/eecs298/eecs298.github.io/main/files/trips.csv

$ wget https://raw.githubusercontent.com/eecs298/eecs298.github.io/main/files/celebrities.csv

For this assignment, you will be provided access to a lightly modified version in the interest of convenience of the original 2013 NYC TLC database in trips.csv. In addition, you will be provided with the celebrities.csv file containing information about another publicly available NYC TLC dataset with photographs of celebrities in taxi cabs.

Your task will be to perform a linkage attack using these two datasets. The NYC TLC dataset has tips, but no names of passengers, while the celebrity photographs we have names, but no tip amounts. The goal is to match celebrity information with trips in the taxi database. It is possible that not every celebrity in this dataset is boarding a taxi contained in the NYC dataset, and furthermore, there are many taxis in the NYC dataset for which we have no photographs. So, your implementation should not assume a strict one-to-one relationship between datasets. However, you may assume that a medallion and date match between the datasets is a correct match.

trips.csv

The original NYC dataset consists of hundreds of thousands of lines. You will be given a shortened, modified version of this dataset with 1000 lines. Each row in this dataset represents one trip made by a NYC taxi. The columns of this dataset are as follows:

medallion: The medallion number on the taxi cab. Hashed with MD5.date: The date of the trip. FollowsYYYY-MM-DDformat.fare_amount: The fare for the trip in USD.tip_amount: The tip, in USD, given to the driver by the passenger.pickup_latitude: The latitude of the starting location for the trip.pickup_longitude: The longitude of the starting location for the trip.dropoff_latitude: The latitude of the trip destination.dropoff_longitude: The longitude of the trip destination.

The medallion number is used to identify the taxi cab and appears on the license plate of the taxi, the side of the taxi, and atop the taxi. The medallion numbers in this dataset follow a specific format, and are composed of one number, followed by one (uppercase) letter, followed by two numbers, e.g.: 1A23.

celebrities.csv

This dataset contains information about different celebrity trips as evidenced by paparazzi photos such as the following example pictures.

Each row in the dataset represents one celebrity trip. The columns of this dataset are as follows:

name: The name of the celebrity or celebrities in the picture.medallion: The medallion number of the taxi in the picture.date: The date the picture was taken. FollowsYYYY-MM-DDformat.

Deanonymizing the Medallion

Before you can execute the linkage attack, you will first need to reverse the anonymization that NYC TLC attempted. Hashing is the process of transforming one string of characters into another, often to make storage and access easier or to hide the original information. Performing a given hash on the same string will always produce the same output.

The medallion column in the trip dataset is hashed with a hash function called MD5. The hashing of the medallion data with MD5 appears to be evidence that NYC officials intended to anonymize the medallion and license numbers. However, it turns out that each medallion gets hashed to a unique hash value. Therefore, we can use our knowledge of the hashing function to map the unhashed medallions in trips.csv to the hashed versions of the medallions seen in celebrities.csv. This in combination with other data becomes Personally Identifiable Information (PII).

To encode a string using MD5, you can use the md5 library as follows:

import md5

hashed_value = md5(my_value.encode()).hexdigest()

[!NOTE] This is only one of many possible hash functions. While some hash functions can be used cryptographically to hide the input of the hash function, MD5 is not one of them.

HW2.py

First, you will implement several classes to set up the taxi trip database with trips.csv as described below. Then, you will use the main branch of the file to perform a linkage attack with the celebrities.csv information. We will then explore trying to protect against this attack by coarsening the query allowed to the database as well as making the query function differentially private.

Implementing the classes

Celebrity: A class containing information from a single photo of a celebrity. Takes the name of the celebrity or celebrities in the photo, the taxi medallion in the photo, and the date the photo was taken. You will compute and store the hashedmedallionvalue as an attribute. The hash function will return letters in lower case but you must convert them into upper case. You will also overload theprintfunction so that you get the following output when printing aCelebrityobject using Olivia Munn as an example (note that the output should end with a newline character):Celebrity Name: Olivia Munn, Medallion: 2E42, Photo Date: 2013-04-19\nTrip: A class containing all information for a given trip as given intrips.csv. You will overload theprintfunction so that you get the following output when printing aTripobject using an exampleTripfromtrips.csv:Hashed Medallion: BCF07D2F69DB29C27DA7CCF5DE6B4843, Trip Date: 2013-04-19, Fare Amount: 8, Tip Amount: 2.1, Pickup Location: ('40.757977','-73.978165'), Dropoff Location: ('40.751171','-73.989838')\n-

TaxiTripsDatabase: This class is used to store and query information fromtrips.csv. The constructor is implemented for you and sets theself.trips_listattribute to the return value of_read_trips_data._read_trips_data: Implement this function to read in the data fromtrips.csvfrom the location given asfile_path. The_at the start of this function is commonly used to indicate it is a function only intended to be used within the class.- Arguments

file_path: File path totrips.csv

- Returns

- Return a

listofTripobjects to store intoself.trips_list.

- Return a

- Arguments

query_trips: Implement this function to return alistofTripobjects that have the same attribute values as the attributes names passed into the function.- Arguments

attribute_names: AlistofTripattribute names to use to query the databaseattribute_values: Alistof values (one for each attribute name given in attribute_list) to match in the database

- Returns

- a

listofTrips with matching given attributes

- a

- Error Handling

raiseanAttributeErrorif any attribute name inattribute_listis not one of theTripclass attributes:"md5_medallion", "date", "fare_amount", "tip_amount", "pickup_location", "dropoff_location".

- Example of a Correct Implementation

- Input:

query_trips(["date", "tip_amount"], ["2013-02-09", 1.50]) - Output: You will return each entire trip, but the output has been shortened here

Hashed Medallion: 9F43C6D3C1DABB937550371A9331D33B, Trip Date: 2013-04-10 ...Hashed Medallion: 47F4D439685D0369045661915B4072B7, Trip Date: 2013-04-10 ...

- Input:

- Arguments

query_mean_tip: Implement this function to calculate and return the mean tip inself.trips_listbetween the given dates . Additonally, do not includeTrips in the mean calculation that have the same hashed medallion as the optional argumentfiltered_medallion. Finally, if the optional argumentepsilonis notNone, you will implementepsilon-Differential Privacy as specified in later in the spec. For now, you can ignore this optional argument.- Arguments

start_date: The start date for the average, in YYYY-MM-DD format.end_date: The end date (inclusive) for the average, in YYYY-MM-DD format.filtered_medallion: MD5 hashed medallion to not include in mean tip calculation. Default empty string.epsilon: Specifies the differential privacy level if not None. Default None.

- Returns

- Two values separated by a comma:

- A float denoting the average tip within the timeframe,

- The number of tips used in the mean calculation.

For example:return mean_tip, number_of_tips. Make sure to properly unpack the return values when calling the function. For example:mean_tip, num_tips = query_mean_tip(*args).

- Two values separated by a comma:

- Error Handling

- If no

Trips are found within the given timeframe,raise ValueError.

- If no

- Example of a Correct Implementation

- Input:

query_mean_tip("2013-03-01", "2013-03-15", "2FD34773ECCE76E331A8158107BA3BE4") - Output:

(1.056904761904762, 42)

- Input:

- Hint: Refer to datetime.strptime(string, format) for how to create a

datetimeobject out of a givenstringformatted in the givenformat. Theformatyou will use is%Y-%m-%d. This will allow you to compare dates using the<=and>=operators.

- Arguments

Linkage Attack: perform_linkage_attack

You are now going to attempt a linkage attack by implementing perform_linkage_attack. Your goal with this attack is to gather a list of tuples of linked Trips and Celebritys so that you can figure out how much each Celebrity tipped on their trip.

[!TIP] Recall what our PII is in this setting to determine with attributes should be passed to

query_tripsto link aCelebrityto aTrip!

perform_linkage_attack: Implement this function to return alistof(Trip, Celebrity)tuples (in that order) to link aCelebrityto aTrip.- Arguments

celebrity_list: A list ofCelebrityobjects to linktaxi_trip_databse: An instance of aTaxiTripsDatabaseto query data from

- Returns

- A

listof linked(Trip, Celebrity)tuples.

- A

- Arguments

Use the "__main__" branch of the Python program to read in celebrities.csv and use this function as you wish to answer the questions.

1. [2 pts.] Report the celebrity who tipped the highest amount, along with the amount they tipped.

2. [2 pts.] Considering the data available in trips.csv (i.e., the column categories), name one thing an attacker could learn about the celebrity and describe how it might violate their sense of privacy.

Difference Attack: perform_difference_attack

For this part, you are now only allowed access to trips.csv using query_mean_tip. Since you can only get mean tip values for a given date range, you will have to perform a difference attack by implementing perform_difference_attack to recover the tip information for a single Celebrity. Recall that a difference attack is performed when you figure out information about an individual when querying a value with and without filtering them out of the query. You can filter out a given celebrity in a mean tip calculation using the filtered_medallion optional argument in query_mean_tip.

Your goal with this attack is to try to reconstruct the tip values for a particular Celebrity using only the query_mean_tip function.

[!TIP] You should only need two queries for each

Celebrity.

perform_difference_attack:Implement this function to return the calculated tip value of the given celebrity using only two calls toquery_mean_tip.- Arguments

celebrity: A Celebrity to guess the tip_amount fortaxi_trip_databse: An instance of a TaxiTripsDatabase to query data fromepsilon: Specifies the differential privacy level if not None. Default None. Pass this argument intoquery_mean_tip

- Returns

- The calculated tip amount for the given celebrity

- Arguments

Again, use the "__main__" branch of the Python program to read in celebrities.csv and use this function as you wish to answer the questions. Use your results from the linkage attack section to verify you calculated the correct tip_amount for each celebrity!

[!NOTE] It is okay if your calculated tips are slightly different (within 0.005) to the true tip values as this may happen with rounding errors.

Applying Differential Privacy (DP)

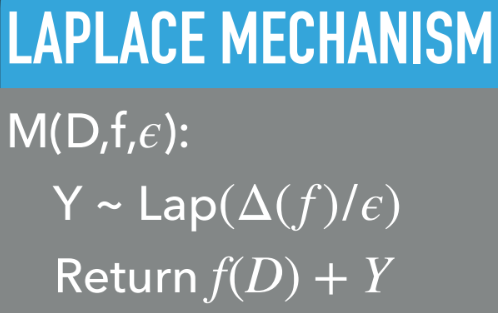

Refer to the query_mean_tip function you wrote for the previous section. Since we can recover Celebrity tip information with a difference attack, you will implement a differentially private algorithm to attempt to prevent the attack. You will implement the Laplace algorithm, which adds noise to the result of the query, making any difference in the output caused by the inclusion of one individual much harder to detect. Specifically, your task will be to return an epsilon-DP response if the epsilon argument is not None in the query_mean_tip function using the Laplace algorithm. epsilon represents the amount of noise added to the dataset during differential privacy.

The tip amount column in this database is a continuous value rather than a discrete value, and as such, we won’t be able to use the randomized response algorithm discussed in class. Instead, we can return the mean tip, plus a random value drawn from a distribution (the Laplace distribution, in this case).

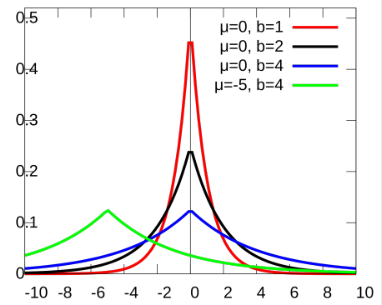

Recall the graph of the Laplace distribution and the overview of the Laplace mechanism from class. Given a query function f (query_mean_tip) and a database D (trips.csv), we want a D.P. algorithm to return an estimate of the query f(D) by adding some randomness Y.

The Laplace distribution (like many other distributions, e.g. the Gaussian distribution) is parameterized by a variance, which for this distribution is called the scale parameter, which is always a positive number. The amount the output of the query can change when only one person’s data changes is called the sensitivity Δ(f). The Laplace algorithm inserts randomness by adding more variance when epsilon is smaller or the sensitivity is larger. Follow these steps to implement the Laplace algorithm for calculating the noised mean in your code:

The Laplace Algorithm

- Calculate the

sensitivity. In our case, the most the mean tip can change is the range of the tips (you can assume the largest tip is $11 and the smallest is $0), divided by the number of tips that are used in the mean calculation. - Calculate the

scale. Thescaleis found by dividing thesensitivitybyepsilon. - Use the numpy

random.laplacefunction to sample a value from this distribution parameterized by thescaleto add noise to the output of the query. - If the added noise makes the average tip value negative, output 0 instead.

For the following questions, run the code that answers each question below in the order described to ensure solution consistency with the random number generator.

3. [1 pt.] First, we will check the accuracy of our noised queries. Query the mean tip for the month of January in 2013 (i.e., start_date="2013-01-01" and end_date="2013-01-31") without DP and with epsilon=0.8. Are the returned outputs close (within $0.50)?

4. [2 pts.] Set epsilon=0.8 and use perform_difference_attack in the previous section and use the same calculation to try to guess each celebrity’s tip amount. Report the tip guess for Judd Apatow and whether it is close to his actual tip value (within $0.50).

5. [2 pts.] Repeat the above with epsilon=0.1, epsilon=1, and epsilon=15. Were you able to calculate a tip guess for Judd Apatow that is within $0.50 of his actual tip value for any of these values? If so, which one(s)? Use your results to order the epsilon values from most to least accurate in terms of how close the calculated tip value was to the true tip value.

Part 2 - Written Reflection Questions

Answer the following short-response questions. In your responses, we are looking for an effort to apply concepts from lectures and readings to answer each of these questions. Make sure to briefly justify each answer.

6. [4 pts.] Give 3 differences between k-anonymity and differential privacy in terms of the properties described in lecture and explain the privacy implications of these differences. Consider the impact that implementing differential privacy had on predicting tip values in this dataset and consider the impact that implementing k-anonymity would have had. Which one is better at preventing difference attacks?

7. [2 pts.] Using the conception of privacy as restricted access to hidden information, describe whether this case study constitutes a violation of privacy. Why or why not?

8. Given that we were able to perform the linkage attack due to the poor anonymization of the taxi medallions, one reaction might be to remove the taxi medallion information altogether from the released data. However, the FOIL act is intended to provide transparency of government data to the public, so one should be careful in removing publically available information.

a. [2 pts.] Describe one possible benefit to the community (NYC residents taking the taxis) for publically releasing some version of the taxi medallion information (anonymized or not).

b. [2 pts.] Describe one possible way the linkage attack could still be performed even if the taxi medallion information was removed.

9. In this question, we will examine contextual integrity in this setting of celebrities using the taxi service in NYC.

a. [2 pts.] Describe the sociotechnical context for this setting: the sender and recipient, the subject, information, and relationship under which information is transmitted.

b. [3 pts.] Describe a setting with a similar sociotechnical context that is not this one (it should not involve the NYC TLC dataset). Make sure to point out why the context is similar. Then describe the norms of appropriateness and the norms of flow for that other context.

c. [3 pts.] Describe how norms of appropriatness and norms of flow are violated in this setting by using the previous two parts to this question. Conclude by stating whether contextual integrity holds in this setting.